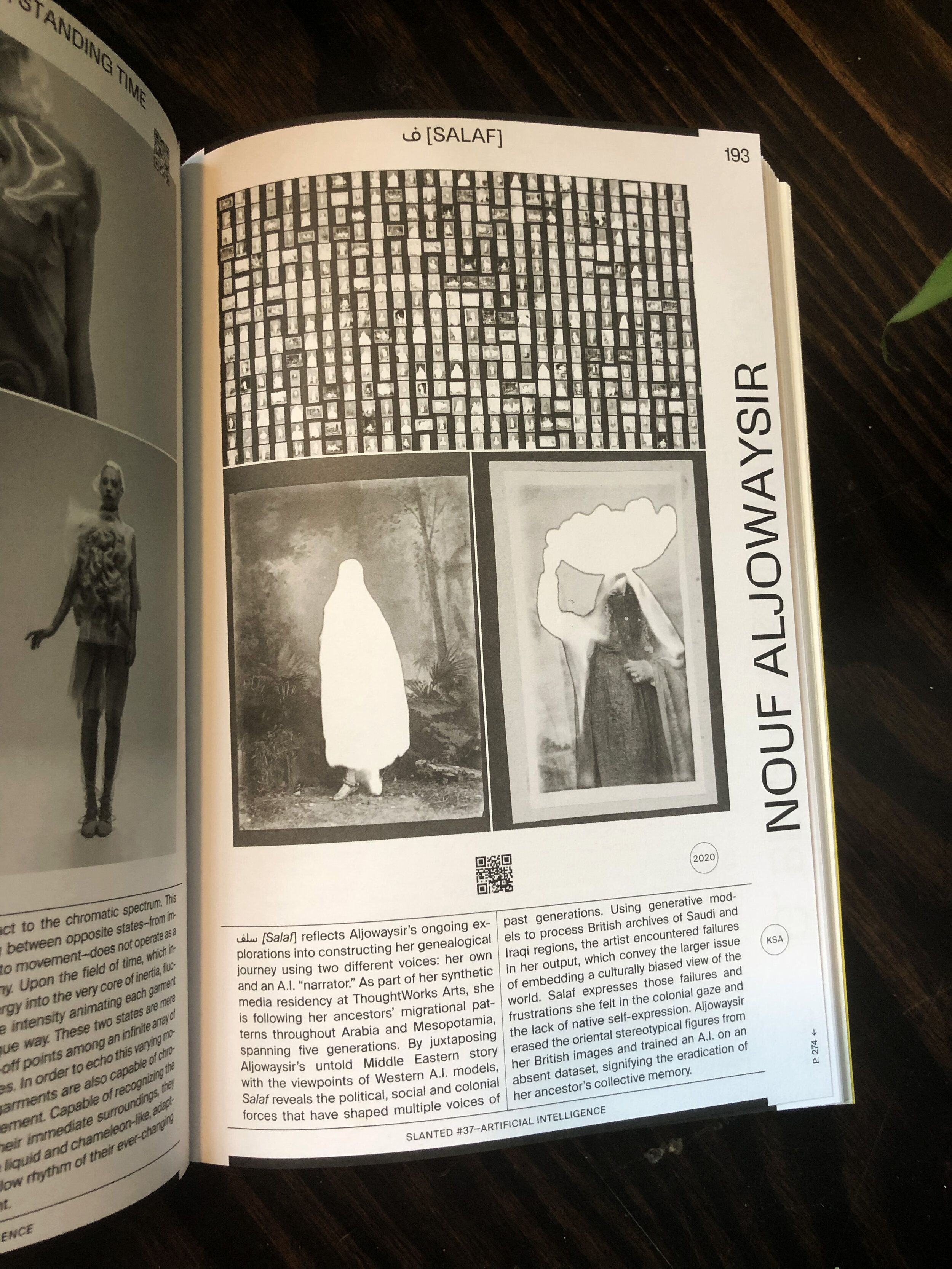

Salaf (Ancestors)

Year: 2020

Residency: ThoughtWorks Arts

Project: AI-Generated Media

A series of visual portraits symbolizing the limitations and failures of artificial intelligence in reconstructing and interpreting my culture and identity.

* East Window

* Palmer Gallery

* NeurIPS Conference for Creativity and Design

* Fisheye Magazine

* Slanted Magazine

* The Conference on Computer Vision and Pattern Recognition (CVPR)

Synopsis

In the summer of 2020, I began exploring my genealogical journey through both a personal and an AI lens. I interviewed family members and began tracing the migration patterns of my ancestors across Arabia and Mesopotamia over five generations.

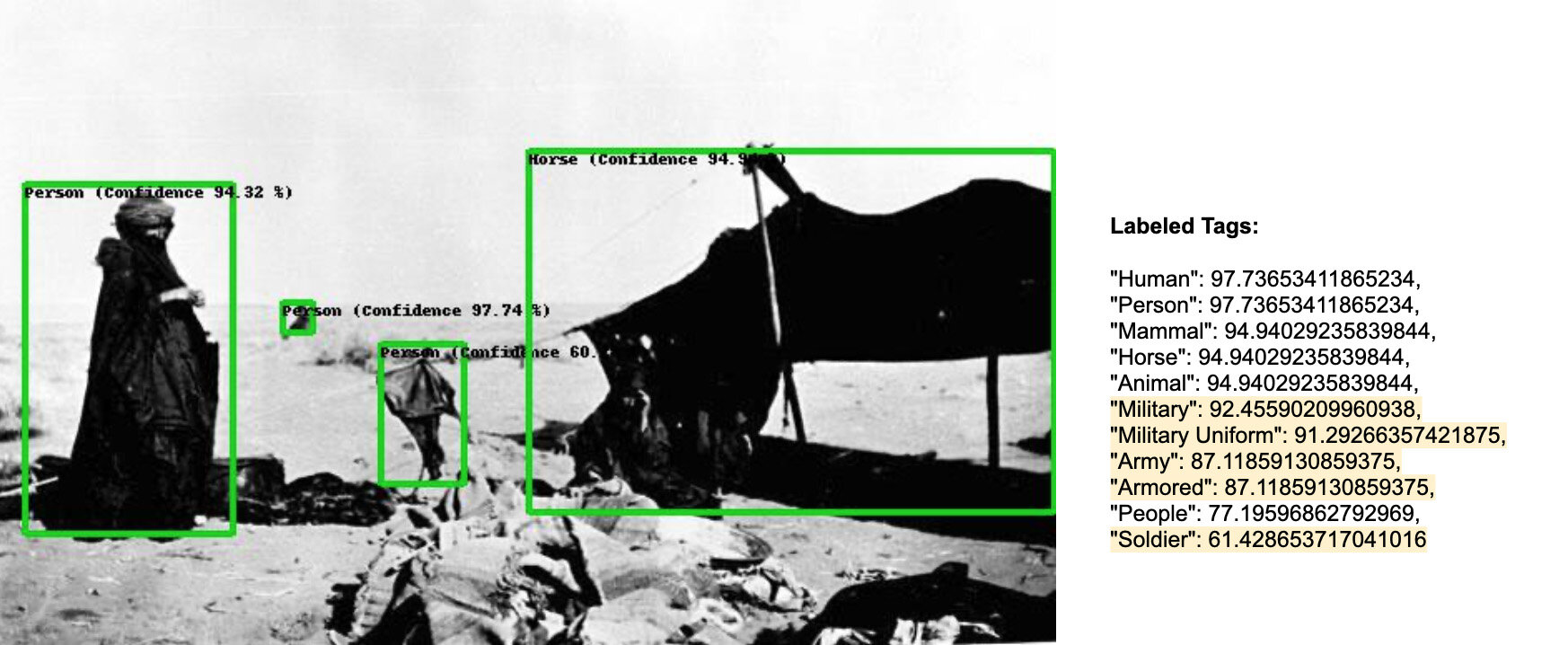

AI Failures

As I juxtaposed my story with the viewpoints of Western AI systems, I encountered a narrative vastly different from my own. The models failed to recognize the nomadic Bedouin faces in my stories and even regurgitated familiar stereotypes and clichés about the Arab world.

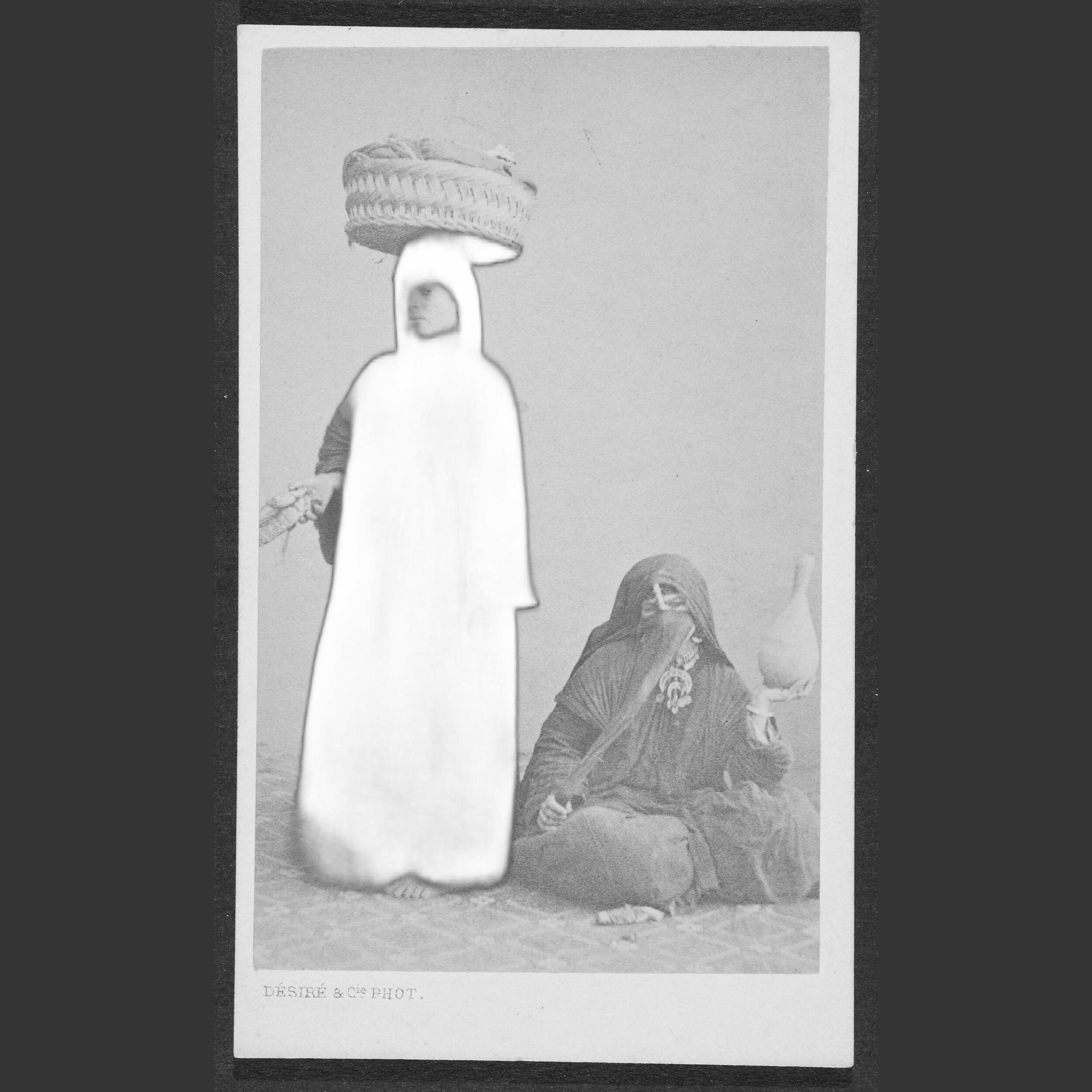

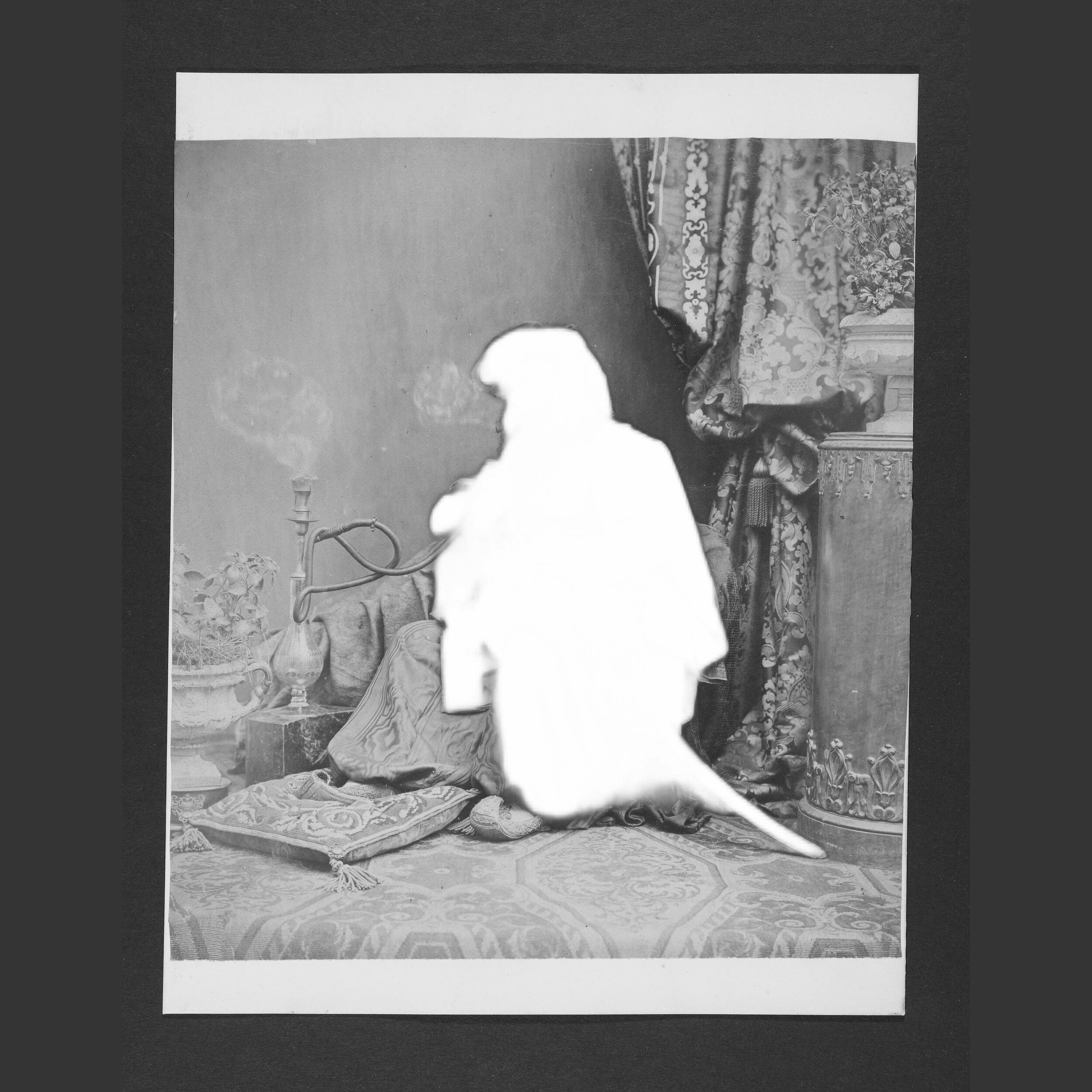

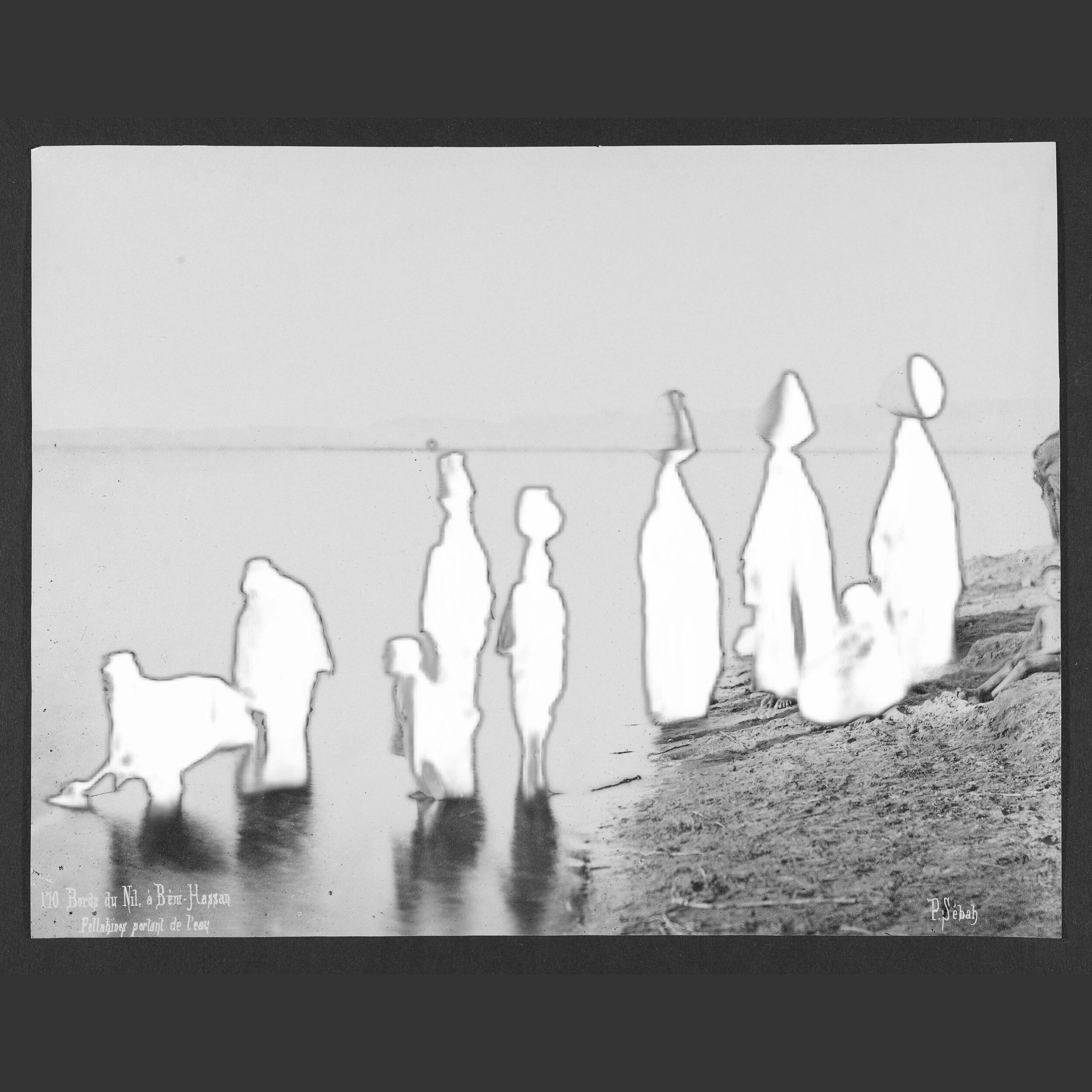

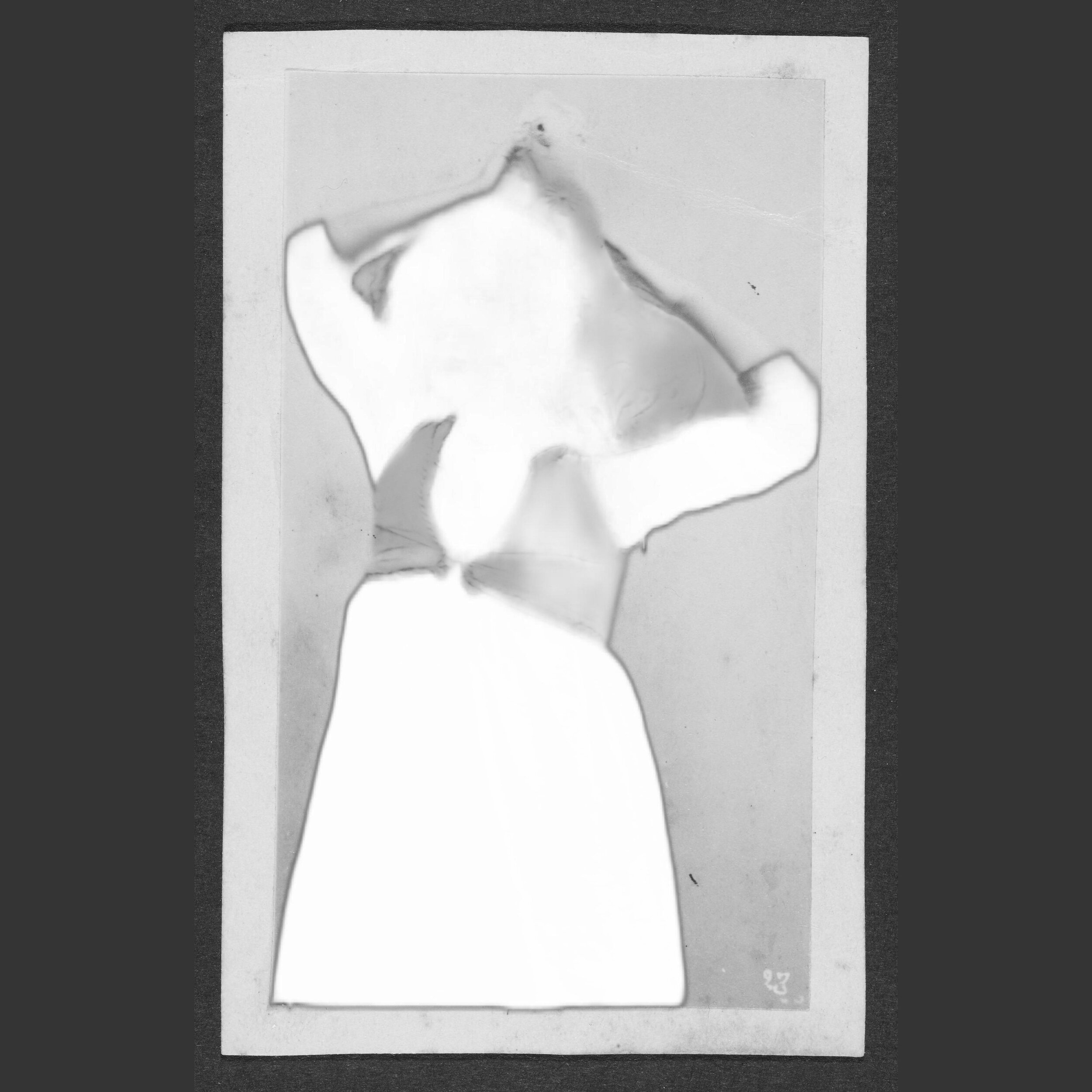

While researching and collecting digital archives relevant to my family's stories, I discovered that the only visual records lacked indigenous self-expression and emanated from a British Empire that purposefully crafted the East (i.e., the “Orient” or “Other”) to be exploited and controlled. When I tested these images with computer vision AI models, the process revealed “failures” in the form of mislabeling, generalizations, and stereotypes. The models failed to recognize the majority of veiled women as women. They haphazardly tagged several Bedouin images with modern-day warfare labels such as “soldier,” “army,” and “military uniform,” and reported high confidence values for these labels. These “failures” uncovered not only the prejudice systemically embedded within commercial AI tools, but also the broader problematic results that arise from using a historical training dataset that lacks an understanding of “Middle Eastern” imagery.

Salaf symbolizes my frustrations with the Western colonial gaze and the lack of native, localized self-expression. I deliberately erased the stereotypical “oriental” figures from my datasets, using an AI segmentation technique called U-2 Net, and trained an AI on this “absent” dataset. These deliberate reversals of technology's functions symbolize the erasure of my ancestors' collective memory while confronting AI's reduction of history and identity.